Deep (Energy) Takes: The Future of the US Grid and the Race for Energy Dominance

Hi Deep (Tech) Takes Friends! 🧑🔬👋

In the last post, I explained how NEPA, the law meant to protect the environment, is now one of the biggest obstacles to building clean energy in the U.S. Delays, lawsuits, and endless red tape are slowing the transition, and unless we fix them, we won’t hit our climate or energy goals.

Today, we’re diving straight into the grid—the critical infrastructure at the heart of achieving energy abundance. This post breaks down the outdated structure and mounting challenges of the U.S. electrical grid, from neglected maintenance and instability caused by rapid solar and wind adoption to surging energy demand driven by electrification and AI data centers. The only viable path forward is a bold, China-style investment in nuclear, solar, wind, and long-duration energy storage to deliver reliable, low-cost power at scale.

The US Grid: Past, Present, and Current Challenges

The case for innovation—and long-duration energy storage (LDES)—becomes obvious once we break down how the grid actually works and what’s holding it back. Let’s begin with the fundamentals of how the U.S. electrical system is structured.

The grid is a top-down electrical system that has remained largely unchanged since the early 1900s. To understand its limitations, let’s walk through how electricity travels from the power plant to your home. It starts at generating stations, where power is produced. That electricity is then stepped up to high voltages and transmitted as alternating current (AC) through long-distance transmission lines.

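Why does the modern grid rely on high voltages and AC? Nearly all generating stations produce AC, and the reason is historical: early grids had no economical way to change the voltage of direct current (DC), and low-voltage DC lost so much power that it typically couldn’t be delivered economically beyond about a mile. (Today, high-voltage DC lines built with modern power electronics are used for some very long links.) The problem is resistive loss in the wires: Power Loss = I²R, where I is the current and R is the line’s resistance. (This comes from P = IV combined with Ohm’s law, V = IR, where V is the voltage drop along the wire itself.) The longer the wire, the higher its resistance and the more power is lost. Because delivered power equals voltage times current, raising the voltage lets the same power flow with less current, and since loss grows with the square of current, stepping up the voltage minimizes energy loss over long distances.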
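The sketch below makes the I²R argument concrete. It is a minimal Python illustration, assuming a single-phase equivalent line at unity power factor; the 100 MW load, the 10 Ω line resistance, and the voltage levels are hypothetical values, not figures for any real line.

```python
# Minimal sketch: resistive loss on a line delivering a fixed amount of power
# at different voltages. All numbers are hypothetical and for illustration only.


def line_loss_watts(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Return the I^2 * R loss for a line delivering power_w at voltage_v.

    Simplifications: single-phase equivalent, unity power factor, and the
    line's own voltage drop is ignored when computing the current.
    """
    current_a = power_w / voltage_v          # P = V * I  ->  I = P / V
    return current_a ** 2 * resistance_ohm   # Power Loss = I^2 * R


if __name__ == "__main__":
    power = 100e6       # 100 MW to deliver (hypothetical)
    resistance = 10.0   # total line resistance in ohms (hypothetical)
    for kv in (69, 138, 345, 765):
        loss = line_loss_watts(power, kv * 1e3, resistance)
        print(f"{kv:>4} kV: {loss / 1e6:6.2f} MW lost ({loss / power:.1%} of delivered power)")
```

Because current scales as 1/V at fixed power, loss scales as 1/V²: doubling the transmission voltage cuts resistive loss by a factor of four.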

Electricity is stepped up to higher voltages through a transformer. As shown in the diagram above, a transformer uses two coils of wire, typically wound around a shared iron core, to transfer energy between circuits by electromagnetic induction. It works only with AC: the constantly changing current in the primary coil creates a changing magnetic field, which induces a voltage in the secondary coil, and this is what allows the voltage to be stepped up or down. The ratio of turns in the two windings sets the change in voltage. For example, if the secondary coil has five times as many turns as the primary, the output voltage will be five times higher (and the current five times lower). The combination of AC and transformers eliminated the need to build coal-fired power plants near population centers. It also made it possible to harness remote sources like hydropower and deliver electricity to customers hundreds of miles away.
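Here is a minimal sketch of the turns-ratio rule for an idealized, lossless transformer (real transformers lose a small amount of energy in the core and windings). The 20 kV input, the 5,000 A current, and the 100:500 turns are hypothetical.

```python
# Ideal-transformer sketch: assumes no losses and perfect magnetic coupling.


def ideal_transformer(v_primary: float, i_primary: float,
                      n_primary: int, n_secondary: int) -> tuple[float, float]:
    """Return (secondary voltage, secondary current) for an ideal transformer.

    Voltage scales with the turns ratio N_s / N_p; current scales inversely,
    so power in equals power out (V_p * I_p == V_s * I_s).
    """
    ratio = n_secondary / n_primary
    return v_primary * ratio, i_primary / ratio


if __name__ == "__main__":
    # Hypothetical step-up: the secondary has 5x as many turns as the primary.
    v_p, i_p = 20_000, 5_000
    v_s, i_s = ideal_transformer(v_p, i_p, n_primary=100, n_secondary=500)
    print(f"Secondary: {v_s / 1e3:.0f} kV at {i_s:.0f} A")
    print(f"Power in: {v_p * i_p / 1e6:.0f} MW, power out: {v_s * i_s / 1e6:.0f} MW")
```

Note the trade: stepping voltage up by 5x steps current down by 5x, so power is conserved. That lower current is exactly what shrinks the I²R loss on the line.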

Now, back to how electricity reaches your home. After traveling through long-distance transmission lines, electricity reaches transmission-distribution (TD) interfaces, where step-down transformers reduce the high voltages before it enters local distribution areas (LDAs). From there, it flows through distribution lines and your electricity meter before entering your home. Once it’s “behind the meter,” it powers everything from your laptop charger to your TV.

Our top-down grid is designed for one-way electricity flow. As David Roberts aptly puts it, electricity moves through transmission lines like a river—flowing in a single direction and needing to be fully consumed at the end of the line.

Now that we understand how electricity travels from power stations to our homes, let’s look closely at how transmission networks are managed behind the scenes. Historically, the entire system was run by vertically integrated utility monopolies that controlled generation, transmission, and distribution. State public utility commissions regulated the rates they charged. This structure emerged to avoid duplicative infrastructure, manage high upfront investment costs, and take advantage of economies of scale. And for a while, it worked—electricity prices steadily fell through the 1960s, and the monopoly model went largely unchallenged.

The utility monopoly began to unravel after the 1973 energy crisis. Among the many reforms that followed, Section 210 of the Public Utility Regulatory Policies Act (PURPA) stood out. It required utilities to purchase power from smaller producers at a rate equivalent to what it would have cost the utility to generate it themselves—effectively guaranteeing these non-utility producers a market. This provision laid the foundation for an entire industry of independent power producers (IPPs). By the 1990s, more than half of the new electricity generation capacity added each year came from IPPs.

Several other major changes occurred during this era of unbundling transmission from generation. Utilities were required to open their transmission lines to all users and provide equal access—no preferential treatment allowed. To enforce this neutrality, utilities had to separate their transmission and generation operations. In many states, this led utilities to sell off their power plants entirely.

In the wake of these changes, Independent System Operators (ISOs), which own no generation assets, began managing the transmission lines for many utilities. ISOs are responsible for creating and operating competitive wholesale electricity markets. In ISO-controlled regions, generators bid to supply electricity, and the ISO accepts the lowest-cost offers until demand is met. Because demand shifts throughout the day and different generators come online at different times, the last (most expensive) generator needed to meet demand keeps changing, and electricity prices fluctuate accordingly. Today, roughly two-thirds of the U.S. is served through wholesale competition, while the rest remains under vertically integrated utility models.
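ISO energy markets typically pay every dispatched generator the clearing price set by the marginal (last-needed) unit. The sketch below assumes that simple uniform-price, merit-order design; real ISO markets add transmission constraints, locational prices, and reserve products. Generator names, capacities, and $/MWh offers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    name: str
    capacity_mw: float
    price_per_mwh: float  # offer price in $/MWh


def clear_market(offers: list[Offer], demand_mw: float) -> tuple[float, dict[str, float]]:
    """Uniform-price, merit-order clearing (simplified).

    Offers are stacked from cheapest to most expensive until demand is met.
    Returns the clearing price, set by the last (marginal) unit dispatched and
    paid to every dispatched unit, plus the MW dispatched from each generator.
    """
    remaining = demand_mw
    dispatch: dict[str, float] = {}
    clearing_price = 0.0
    for offer in sorted(offers, key=lambda o: o.price_per_mwh):
        if remaining <= 0:
            break
        take = min(offer.capacity_mw, remaining)
        dispatch[offer.name] = take
        clearing_price = offer.price_per_mwh
        remaining -= take
    if remaining > 0:
        raise ValueError("offered capacity cannot cover demand")
    return clearing_price, dispatch


if __name__ == "__main__":
    # Hypothetical offers; solar drops out of the stack after sunset.
    midday = [
        Offer("solar", 300, 0.0),
        Offer("wind", 200, 0.0),
        Offer("nuclear", 400, 10.0),
        Offer("gas_ccgt", 500, 40.0),
        Offer("gas_peaker", 200, 120.0),
    ]
    evening = [o for o in midday if o.name != "solar"]
    for label, offers, demand in [("midday", midday, 900), ("evening", evening, 1200)]:
        price, dispatch = clear_market(offers, demand)
        print(f"{label}: ${price:.0f}/MWh, dispatch {dispatch}")
```

In this toy example the price jumps from $10/MWh at midday to $120/MWh in the evening, because once solar drops out, a gas peaker becomes the marginal unit.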

The Three-Headed Hydra Undermining U.S. Grid Reliability

The urgent challenge facing our grid infrastructure is its declining ability to distribute power reliably. If you’re a regular deep (tech) takes reader in the U.S., chances are you’ve experienced at least one or two power outages lasting several hours each year. Three key forces are driving this decline: a lack of infrastructure maintenance, the rapid (and uncoordinated) adoption of renewables, and a sharp surge in energy demand—the likes of which we haven’t seen in decades.

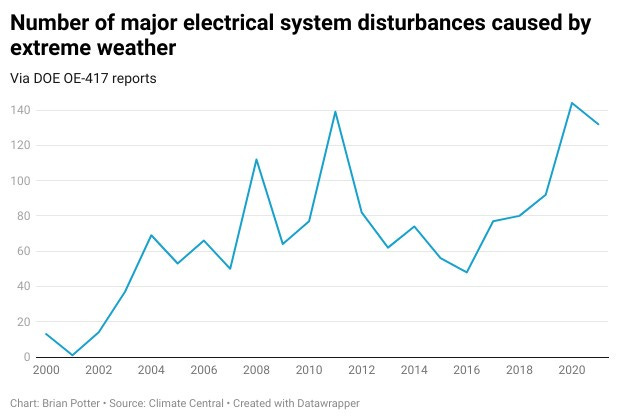

Let’s begin with the lack of infrastructure maintenance. Around 90% of U.S. power outages stem from failures in the distribution system, often triggered by extreme weather. High winds, storms, and wildfires routinely knock down overhead power lines. Between 2000 and 2020, the number of major weather-related electrical disturbances surged from 10 to 140. Many of these could have been prevented with basic upkeep like tree-trimming, yet from 2000 to 2015, utility spending on distribution per mile fell by over 30%.

Extreme weather and poor grid maintenance are urgent concerns—but there’s another growing challenge. Ironically, the rapid adoption of solar and wind is making the grid more unstable, even as it moves us toward net zero. Firm energy sources like coal plants are being phased out and replaced by intermittent renewables, creating volatility in supply that the current grid isn’t equipped to handle.

So why is the grid adopting solar and wind despite the added instability? Simply put, customers are acting in their self-interest, choosing energy sources with near-zero marginal costs because they’re cheaper. While many frame the energy transition as a shift from carbon-based to zero-emission systems, I prefer how blogger Azeem Azhar puts it: the real transition is from energy as a commodity to energy as a technology.

If you look at the history of energy, sources like oil, natural gas, and coal have always been treated as commodities. Oil, for instance, is finite and unevenly distributed, with prices swinging unpredictably based on politics and global market dynamics, even as we discover new reserves and innovate extraction methods. By contrast, solar panels and wind turbines are manufactured technologies that convert freely available sunlight and wind into electricity rather than extracting a finite fuel, which lets them benefit from rapid improvement driven by learning curves. Solar has an impressive learning rate above 20%, meaning its costs fall by more than a fifth with every doubling of cumulative installed capacity, with wind slightly behind at around 15%. The only technologies I can think of that improve even faster are semiconductors and DNA sequencing, both near 45%!
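A learning rate describes how much unit cost falls each time cumulative installed capacity doubles (often called Wright’s law), not how much it falls per year. Here is a minimal sketch using a normalized starting cost of 1.0 and a hypothetical 1,000x scale-up; the 20% and 15% rates are the ones cited above.

```python
import math


def wrights_law_cost(initial_cost: float, initial_capacity: float,
                     cumulative_capacity: float, learning_rate: float) -> float:
    """Unit cost after cumulative capacity grows from initial_capacity.

    Wright's law: every doubling of cumulative capacity cuts unit cost by
    `learning_rate` (e.g. 0.20 for a 20% learning rate).
    """
    doublings = math.log2(cumulative_capacity / initial_capacity)
    return initial_cost * (1 - learning_rate) ** doublings


if __name__ == "__main__":
    # Hypothetical: normalized starting cost of 1.0 and a 1,000x scale-up (~10 doublings).
    for tech, lr in [("solar", 0.20), ("wind", 0.15)]:
        cost = wrights_law_cost(1.0, 1.0, 1000.0, lr)
        print(f"{tech}: {cost:.3f} of its starting cost after a 1,000x scale-up")
```

How fast costs fall per year therefore depends on how quickly deployment keeps doubling, which is why booming installations and falling prices reinforce each other.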

The Duck Curve visually illustrates the challenges posed by high levels of solar and wind adoption. The load (blue line) represents total electricity demand throughout the day, while the net load (green line) shows demand minus solar and wind generation, indicating how much electricity must be provided by other sources. Solar, in particular, significantly reduces net demand during daylight hours but disappears entirely in the evening. As solar penetration increases, the midday dip deepens and the evening ramp grows steeper.
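The duck curve’s shape comes from simple subtraction. This sketch uses made-up hourly load and solar profiles (not real grid data), holds wind at zero for simplicity, and reports the net load at noon and the steepest three-hour rise in net load as solar capacity grows.

```python
# Hypothetical hourly demand (MW) for one day, hours 0..23. Not real grid data.
LOAD = [620, 600, 590, 585, 590, 620, 680, 730, 760, 770, 775, 780,
        785, 790, 800, 820, 860, 920, 960, 950, 900, 820, 730, 660]
# Hypothetical solar output as a fraction of installed capacity, hours 0..23.
SOLAR_SHAPE = [0, 0, 0, 0, 0, 0, 0.05, 0.2, 0.4, 0.6, 0.8, 0.95,
               1.0, 0.95, 0.8, 0.6, 0.4, 0.2, 0.05, 0, 0, 0, 0, 0]
NOON = 12


def net_load(load, solar_shape, solar_capacity_mw, wind_mw=0.0):
    """Net load = demand minus solar and wind output, hour by hour."""
    return [l - s * solar_capacity_mw - wind_mw for l, s in zip(load, solar_shape)]


def max_ramp(series, window_hours=3):
    """Largest increase in the series over any `window_hours` span (MW)."""
    return max(series[i + window_hours] - series[i]
               for i in range(len(series) - window_hours))


if __name__ == "__main__":
    for capacity in (0, 200, 400, 600):
        nl = net_load(LOAD, SOLAR_SHAPE, capacity)
        print(f"solar {capacity:>3} MW: net load at noon {nl[NOON]:5.0f} MW, "
              f"steepest 3-hour ramp {max_ramp(nl):4.0f} MW")
```

Running it shows both effects: the midday trough deepens and the steepest three-hour ramp grows larger as solar capacity increases.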

Scott Bessent’s Wise Words: Increasing Energy Demand and the (Green) Energy Cold War

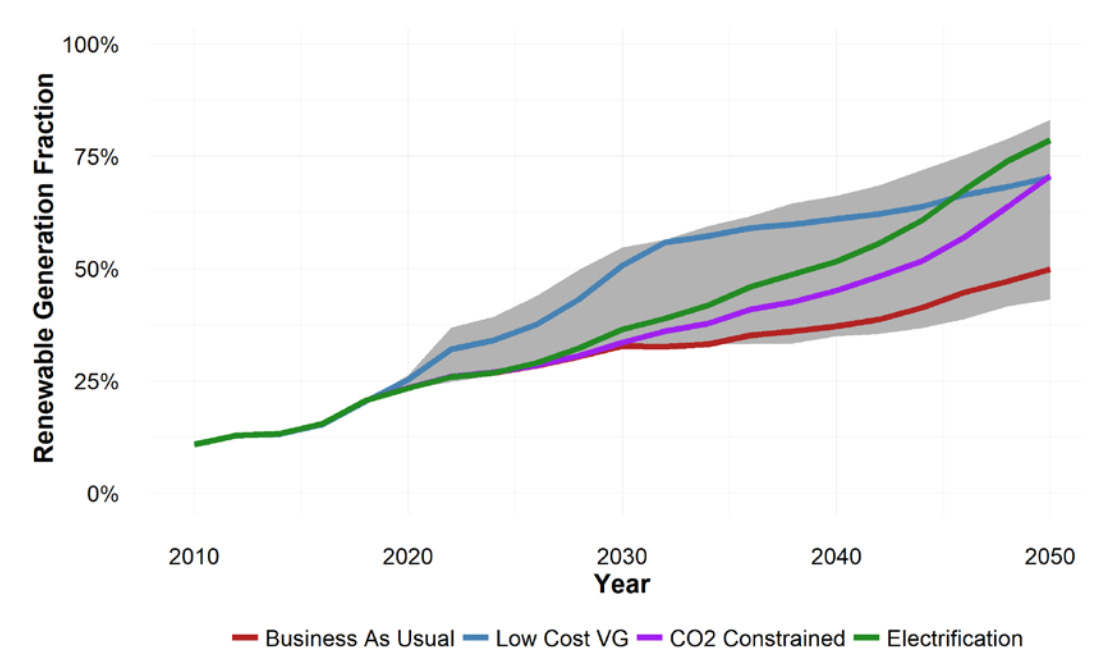

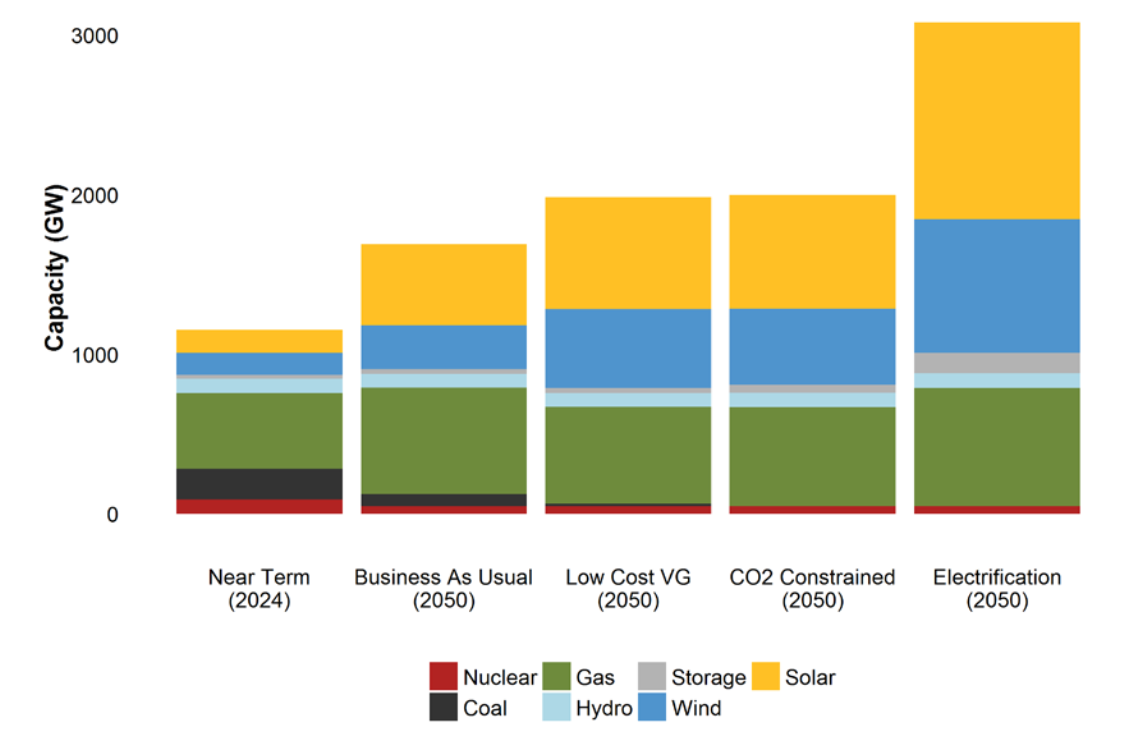

The third major challenge threatening U.S. grid reliability is rapid growth in energy demand, something we haven’t experienced since the early 2000s. This surge is largely driven by widespread electrification: fossil fuel-powered vehicles, furnaces, stoves, and industrial equipment are increasingly being replaced with electric alternatives. The National Renewable Energy Laboratory’s (NREL) 2021 U.S. Renewable Integration Study estimates that a scenario pairing roughly 80% renewable generation with broad electrification would require approximately three times today’s electricity generation capacity by 2050. We’re nowhere near the pace needed to meet this demand. Still, as I discussed in my previous post on NEPA, I remain cautiously optimistic that Trump’s recent energy executive orders may help accelerate capacity expansion.
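To put “three times today’s capacity by 2050” in perspective, here is the constant annual growth it implies. The sketch assumes a 2025 starting point; the study’s own base year may differ slightly.

```python
def required_annual_growth(multiple: float, years: int) -> float:
    """Constant yearly growth rate that multiplies a quantity by `multiple` over `years`."""
    return multiple ** (1 / years) - 1


if __name__ == "__main__":
    # Assumption: "today" = 2025, so 25 years to 2050.
    rate = required_annual_growth(3.0, 2050 - 2025)
    print(f"Tripling capacity by 2050 needs ~{rate:.1%} growth per year, every year")
```

Sustaining roughly 4.5% growth every year for 25 years is a stark contrast with U.S. generation that, by the figures quoted later in this post, has been flat since the early 2000s.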

What we didn’t anticipate was the massive rise in energy demand driven by data centers. Let’s look at the numbers: SemiAnalysis, a leading semiconductor research firm, analyzed over 3,500 data centers across North America, including those currently under construction. The firm projects that AI will push data centers to consume around 4.5% of global electricity generation by 2030. It also forecasts that data center power demand will nearly double, from about 49 GW in 2023 to 96 GW by 2026, with AI workloads accounting for roughly 40% of that demand.
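Gigawatts measure power, while electricity bills and grid planning deal in energy over time. This sketch converts the forecast above into annual energy; the 80% average utilization is an assumption for illustration, not part of the SemiAnalysis forecast.

```python
HOURS_PER_YEAR = 8760  # ignores leap years


def annual_energy_twh(power_gw: float, utilization: float = 1.0) -> float:
    """Energy in TWh/year for a load drawing power_gw at the given average utilization."""
    return power_gw * utilization * HOURS_PER_YEAR / 1000  # GWh -> TWh


if __name__ == "__main__":
    # GW figures are from the forecast cited in the text; 80% utilization is an assumption.
    for year, gw in [(2023, 49), (2026, 96)]:
        print(f"{year}: {gw} GW -> ~{annual_energy_twh(gw, 0.8):.0f} TWh/yr at 80% utilization")
    ai_gw = 0.40 * 96  # AI's ~40% share of 2026 demand
    print(f"AI in 2026: ~{ai_gw:.0f} GW -> ~{annual_energy_twh(ai_gw, 0.8):.0f} TWh/yr")
```

Going from 49 GW to 96 GW in three years also implies average growth of about 25% per year, since (96/49)^(1/3) ≈ 1.25.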

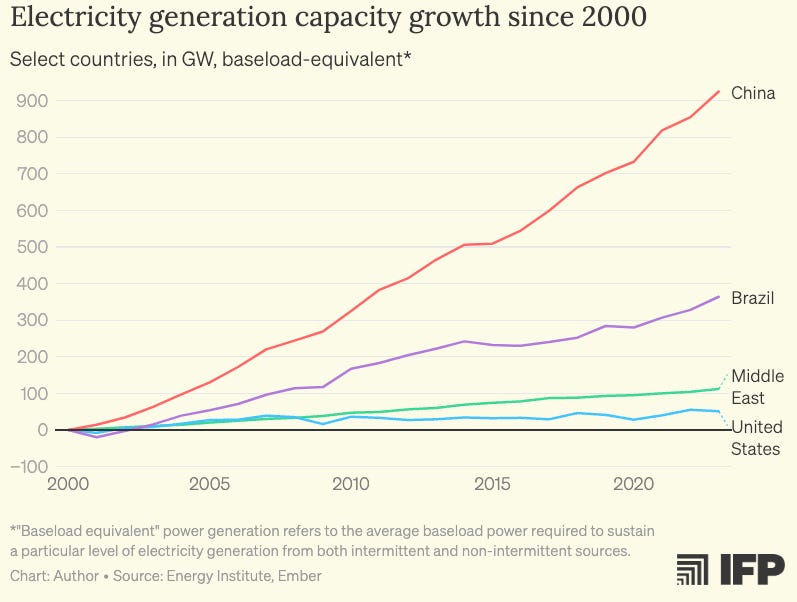

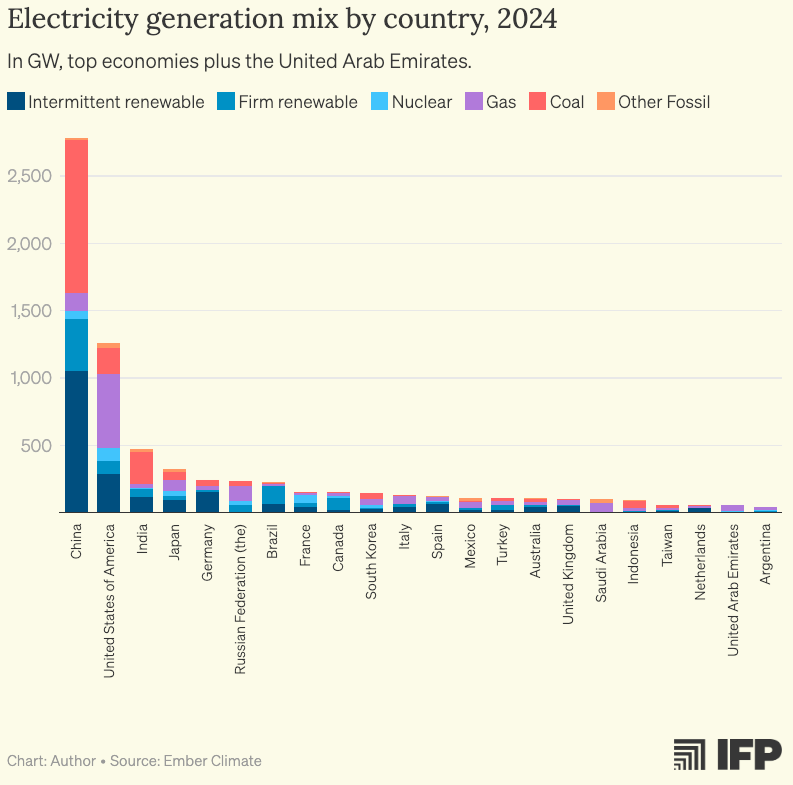

If the U.S. hopes to continue reshoring data centers—and operate them economically—we urgently need to get our energy strategy in order. Meanwhile, China is already pulling ahead on multiple energy fronts, as I discussed in my previous post:

“If you compare the electricity production capacity of the US vs. China, the US has 1.1 TW of production capacity compared to China’s 3 TW. By 2050, China will have about 9 TW compared to our 3 TW. US electricity generation, at about 4,200 TWh annually, has not increased since the early 2000s, and building out new capacity here costs three times more! Meanwhile, China has tripled its energy generation since 2005 and does not care whether it generates clean energy; it only cares about generating more electricity. China relies heavily on its own coal (about 60% of its energy comes from it), but it also leads the world in renewable power installation. By 2050, about 90% of its energy will come from renewables. China also has about 20 nuclear reactors under construction. It plans to build 300 nuclear reactors with about 500 GW of generating capacity, roughly half of current US capacity. Even though US industrial electricity tariffs are slightly lower than China’s, China’s residential electricity tariffs are about $0.07/kWh, roughly half of US residential tariffs. If the US does not get its act together in the next year or two, China will have the lowest industrial and residential electricity tariffs globally.

This highlights the massive challenges the US will face as we try to bring manufacturing and computing power onshore. If you believe the cost of power will drive the AI industry, the US is in deep trouble as China accelerates ahead with far more capacity and faster and cheaper capacity buildout.”
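The quote above mixes capacity (TW: how much power the fleet can deliver at once) with generation (TWh: how much energy it actually delivers over a year). A quick sketch ties the two U.S. figures together through the fleet-wide average capacity factor; it is a crude average across all plant types.

```python
HOURS_PER_YEAR = 8760  # ignores leap years


def average_capacity_factor(generation_twh: float, capacity_tw: float) -> float:
    """Fraction of the fleet's maximum possible annual output that was actually generated."""
    return generation_twh / (capacity_tw * HOURS_PER_YEAR)


if __name__ == "__main__":
    # US figures quoted above: ~4,200 TWh/yr of generation from ~1.1 TW of capacity.
    cf = average_capacity_factor(4200, 1.1)
    print(f"Fleet-wide average capacity factor: {cf:.0%}")
```

The result, roughly 44%, is why capacity and generation can’t be compared one-to-one: the fleet as a whole runs well below its nameplate rating, and intermittent sources like solar and wind run lower still.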

Now-confirmed US Treasury Secretary Scott Bessent put it best in his confirmation hearing when answering a question from Oregon Senator Wyden: “There is not a clean energy race. There is an energy race.” I couldn’t agree more.

Anyone involved in advanced manufacturing in the U.S. knows we’ve steadily lost our status as one of the world’s leading manufacturing powerhouses due to decades of offshoring. This offshoring helped keep domestic electricity demand flat since the early 2000s, but that era is ending. Globalization is over, and the ability to domestically produce the most advanced semiconductors, ships, data centers, telecom equipment, batteries, electric vehicles, and military hardware has become an urgent national security priority.

We frequently emphasize the urgency of reshoring manufacturing, but none of that reshoring matters unless we can generate abundant, low-cost electricity. Energy pricing is especially critical for data centers with their massive AI clusters. At a 500 MW scale, differences in $/kWh pricing that look small on paper can add up to nearly a billion dollars in operating costs. As data centers grow toward one to five gigawatts by 2027, that cost gap could balloon to tens of billions. Consider the SemiAnalysis chart below: hyperscalers building a 500 MW data center would save roughly a billion dollars by choosing Qatar, with its low electricity tariffs, over the UK, one of the most expensive markets globally.
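A back-of-the-envelope sketch shows how tariff differences scale with facility size. It assumes the data center draws its full 500 MW around the clock; the $0.20/kWh gap is a hypothetical input, not a figure read off the SemiAnalysis chart.

```python
HOURS_PER_YEAR = 8760  # ignores leap years


def annual_power_cost_usd(load_mw: float, price_per_kwh: float, utilization: float = 1.0) -> float:
    """Yearly electricity bill for a facility drawing load_mw at the given average utilization."""
    kwh_per_year = load_mw * 1000 * HOURS_PER_YEAR * utilization  # MW -> kW, times hours
    return kwh_per_year * price_per_kwh


if __name__ == "__main__":
    load = 500  # MW, the data center size discussed in the text
    # Cost of each extra cent per kWh, assuming the facility runs flat out all year.
    per_cent = annual_power_cost_usd(load, 0.01)
    print(f"Each $0.01/kWh costs ~${per_cent / 1e6:.0f}M per year at {load} MW")
    # Hypothetical tariff gap; substitute real rates for the markets being compared.
    gap = 0.20
    print(f"A ${gap:.2f}/kWh gap costs ~${annual_power_cost_usd(load, gap) / 1e9:.2f}B per year")
```

At roughly $44 million per year for every cent per kWh, a billion-dollar difference implies either a large tariff gap or several years of operation. At gigawatt scale, the same arithmetic multiplies accordingly.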

The US Must Copy China: All-In On Accelerating Nuclear, Solar, Wind, and Energy Storage

Thus far, I’ve explored the three-headed hydra undermining the reliability of the U.S. electrical grid. First, inadequate infrastructure maintenance has left the grid dangerously vulnerable to extreme weather. Second, the rapid adoption of intermittent renewables like solar and wind is complicating utilities’ ability to consistently balance supply and demand. Finally, energy demand is growing at a pace not seen since the early 2000s, driven by broad electrification trends and the explosive growth of data centers.

The solution to the first problem is straightforward in theory: we need more frequent and proactive maintenance of our existing grid infrastructure. In addition, we must accelerate the construction of transmission lines that span broader geographic areas. This would allow us to tap into diverse energy sources and improve the overall reliability of the grid.

Solving the other two challenges is far more complex. There’s no clear-cut solution yet for firming the grid and making it resilient to the rising energy demands ahead.

One option is to rely on low-carbon firm generation, with nuclear energy leading the pack. Other contenders include geothermal, fossil fuels paired with carbon capture and storage (CCS), and hydrogen—all of which could help reduce our dependence on natural gas peaker plants.

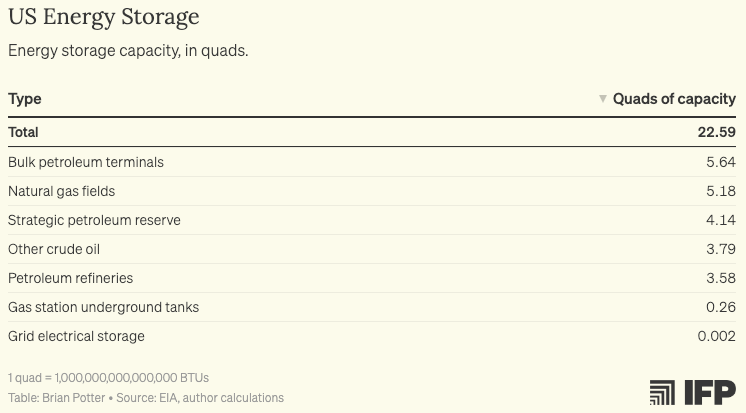

The alternative is to use long-duration energy storage (LDES) to store electricity for extended periods, long enough to substitute for firm generation. However, storing energy as fossil fuel, whether in petroleum reserves, natural gas fields, or crude oil stockpiles, remains significantly cheaper than nuclear or LDES, leaving little economic incentive to shift away from traditional fuels. Today, large-scale electricity storage accounts for less than 2% of total energy storage capacity, and it’s dominated by pumped hydro (70%) and utility-scale lithium-ion batteries (30%).
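Storage is sized along two axes: power (MW, how fast it can discharge) and energy (MWh, how much it can deliver before running empty). Their ratio is the duration. This sketch compares a hypothetical 100 MW / 400 MWh lithium-ion plant (4 hours is a common configuration, assumed here) against a hypothetical three-day lull in wind and solar; all numbers are illustrative.

```python
def storage_duration_hours(energy_mwh: float, power_mw: float) -> float:
    """Hours a storage system can discharge at its full rated power."""
    return energy_mwh / power_mw


def energy_needed_mwh(deficit_mw: float, hours: float) -> float:
    """Energy required to cover a constant supply shortfall for the given number of hours."""
    return deficit_mw * hours


if __name__ == "__main__":
    # Hypothetical 100 MW / 400 MWh lithium-ion plant.
    battery_mwh = 400
    print(f"Li-ion example: {storage_duration_hours(battery_mwh, 100):.0f} h at full power")
    # Hypothetical 100 MW shortfall lasting through a 72-hour wind and solar lull.
    need = energy_needed_mwh(100, 72)
    print(f"A 72-hour lull needs {need:,.0f} MWh, "
          f"{need / battery_mwh:.0f}x the Li-ion plant's stored energy")
```

Bridging multi-day gaps takes many times the energy of a typical few-hour battery installation, which is the gap long-duration storage is meant to fill.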

It’s still too early to say whether firm generation or long-duration storage will become cost-effective enough to dominate. But that’s almost beside the point. The U.S. needs to execute three crystal-clear priorities for this decade:

Copy China.

Copy China.

Copy China.

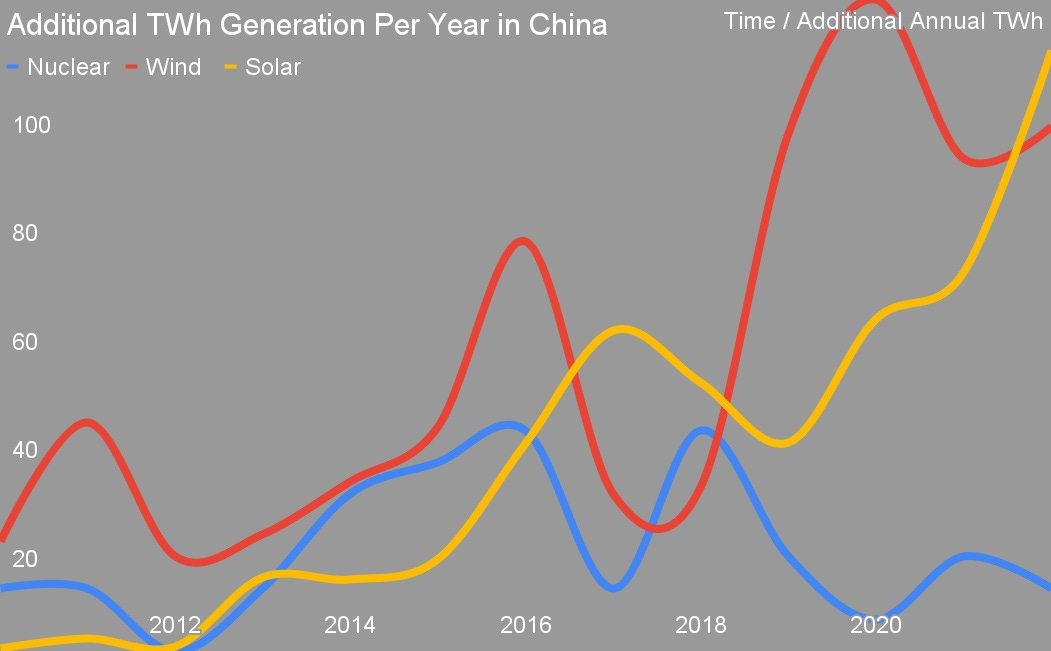

If it hasn’t been obvious throughout this deep (energy) takes series, China’s sole focus is rapid energy capacity growth and cheap electricity. They’re leading the global push to deploy next-generation nuclear reactors—and they’re adding five times more solar and wind generation (in TWh) than nuclear each year.

Meanwhile, the U.S. is either shutting down nuclear plants or facing massive cost overruns, like the Vogtle project, which came in at least 100% over budget and roughly seven years behind schedule. While nuclear energy (which I’ll cover on its own in a future post) could still play a valuable role in powering data centers or serving regions with limited land or sunlight, it’s unlikely to make up the bulk of the U.S. energy mix. Even with the promise of small modular reactors (SMRs) and microreactors, nuclear has yet to prove it can follow a learning curve. In contrast, solar and wind have been riding steep learning curves for years, steadily driving down the cost of building them on top of their near-zero marginal cost.

When scaling solar and wind, long-duration energy storage is the only realistic path to making renewables the dominant energy source—at a sustainable cost. A 2022 McKinsey study on decarbonizing the grid with 24/7 clean power purchase agreements estimated that, without long-duration storage, relying on renewables and conventional storage like lithium-ion batteries would be at least twice as expensive as pairing renewables with long-duration storage. In my next deep (energy) takes post, we’ll explore which long-duration storage technologies are the most promising to adopt.